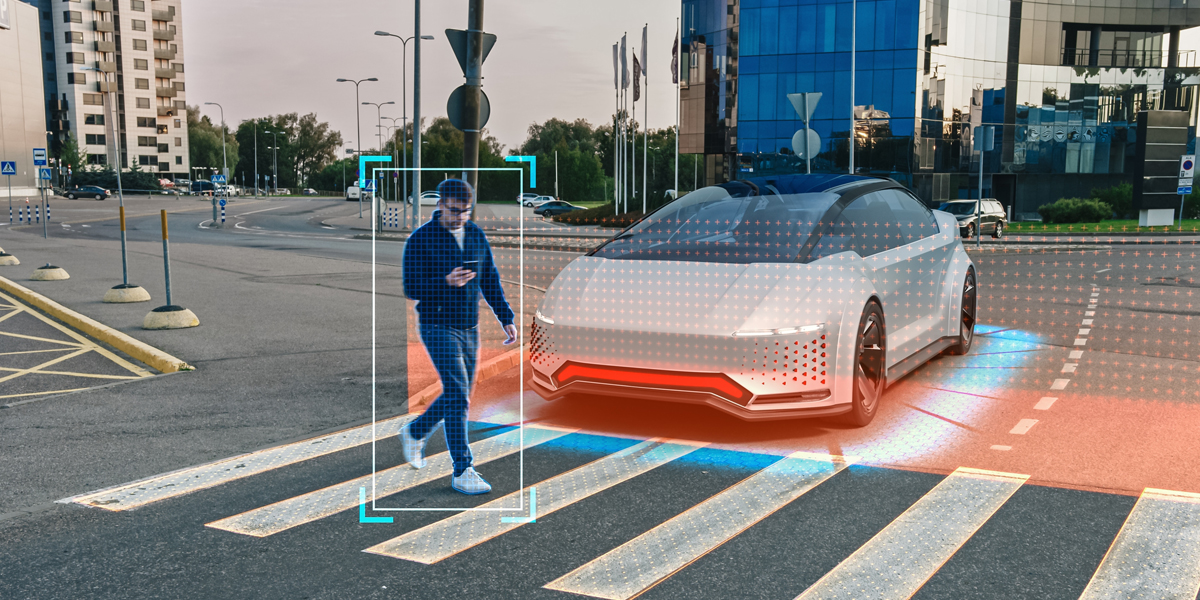

As globalization continues, cities are experiencing rising vehicle traffic and a growing number of car accidents. Most crashes are caused by human error, and technology is increasingly being used to address this problem. As vehicle technology has evolved, autonomous vehicles (AVs) have made growing use of pedestrian detection systems. These systems rely on data collected by cameras and other sensors, which is processed by the vehicle’s electronic control unit (ECU). The results are then passed to the vehicle’s vital systems, such as the brakes and headlights, either to avoid a collision or to minimize its effects.

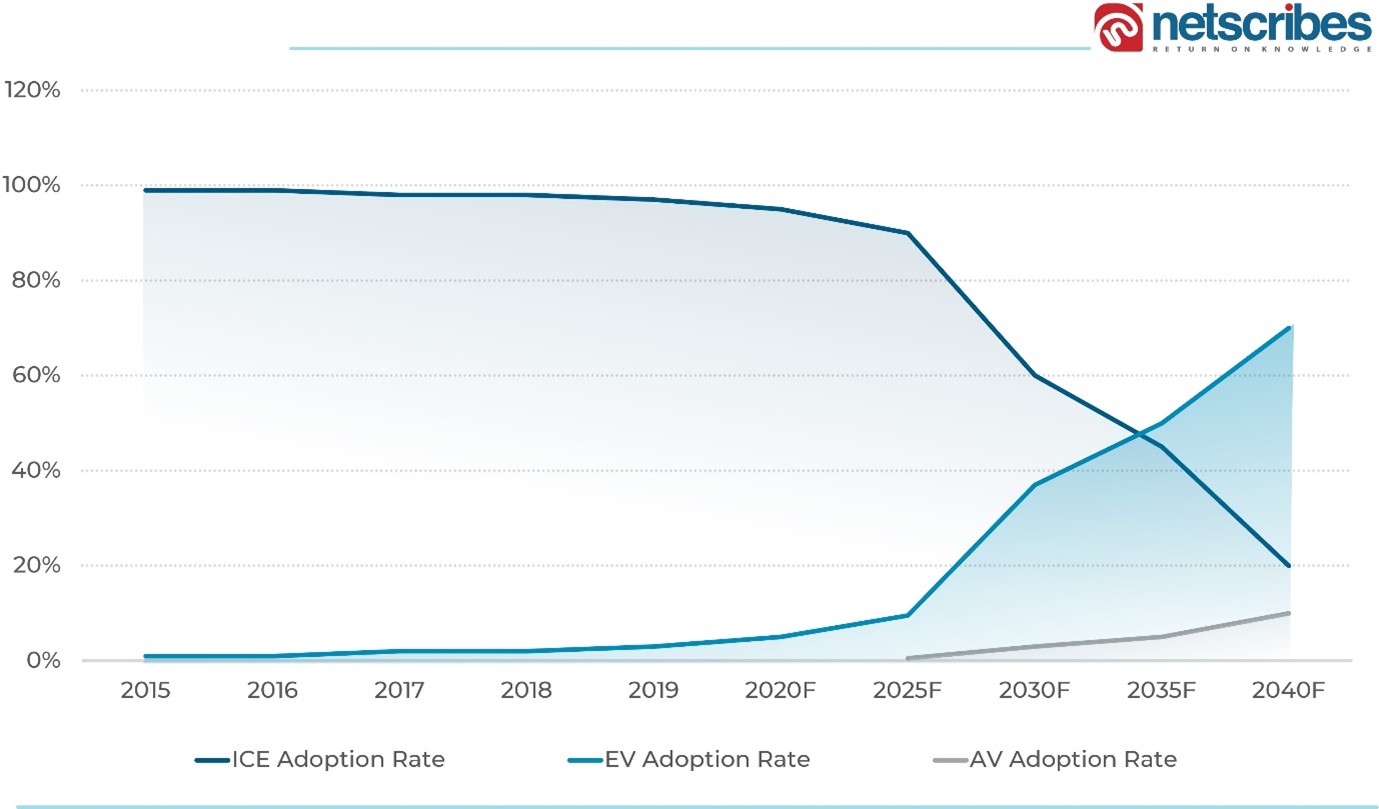

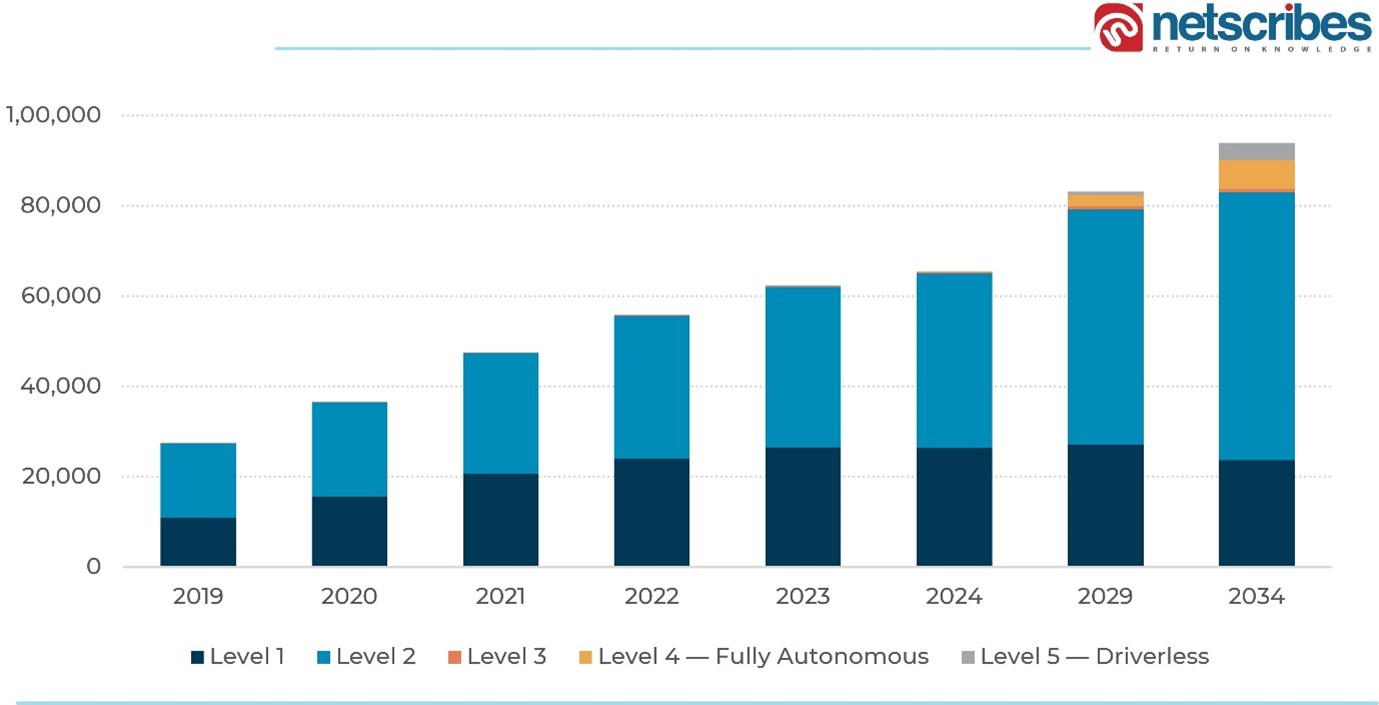

Robo-taxis are expected to be the first fully autonomous vehicles to come to market, by the end of 2025, with other autonomous vehicles following by 2030. Because computer vision has become an integral part of autonomous driving technology, future AVs are expected to rely heavily on cameras, owing to their safety and cost advantages.

Technology overview

Pedestrian intent can be defined as a pedestrian’s future action of crossing or not crossing the street. This information is essential for AVs to navigate safely and smoothly and to avoid fatalities and injuries. The system must detect a pedestrian’s intent independently of where the vehicle itself is going next.

Production vehicles currently offer SAE Level 2 and Level 3 driving automation, and several OEMs aim to advance to Level 5 autonomy. Over the next five years, companies will therefore prioritize perfecting computer vision technology that can replace human vision.

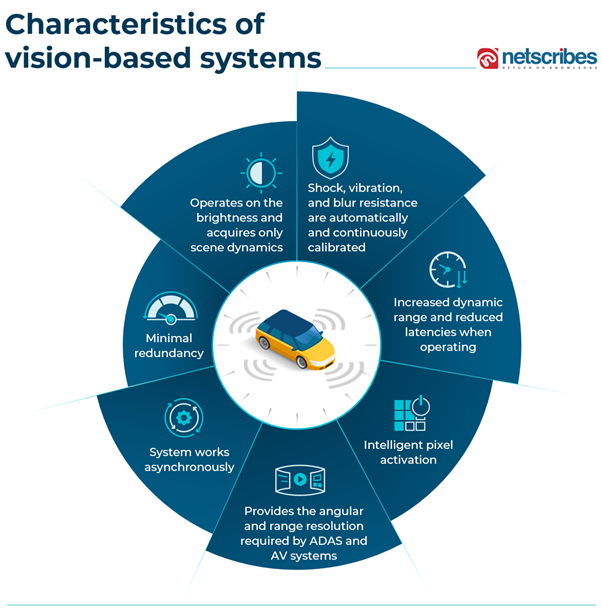

Event-Based Vision Sensors (EVS) offer a dynamic approach to acquiring visual information: their smart pixels record only changes in the scene, rather than capturing the entire scene at regular intervals. These smart pixels are inspired by the way the human eye works and respond almost immediately to changes in brightness, which on a moving vehicle are produced by both moving and stationary objects. The technology can be used in robotics, mobile devices, mixed reality, automotive applications including pedestrian intent prediction, and a range of industrial applications.
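To make the "record only changes" idea concrete, here is a minimal sketch that emulates an idealized event pixel from two conventional grayscale frames: a pixel emits an event only when its log brightness changes by more than a contrast threshold. The frame format, the threshold of 0.2, and the function name `frames_to_events` are assumptions for illustration; real event sensors do this asynchronously in each pixel's circuitry rather than by comparing whole frames.

```python
import math
from typing import List, Tuple

# An event is (x, y, polarity): +1 if the pixel got brighter, -1 if it got darker.
Event = Tuple[int, int, int]

def frames_to_events(prev: List[List[float]], curr: List[List[float]],
                     threshold: float = 0.2, eps: float = 1e-6) -> List[Event]:
    """Emulate an idealized event sensor from two frames (hypothetical helper).

    A pixel fires only if its log intensity changed by more than `threshold`;
    pixels whose brightness did not change produce no output at all.
    """
    events: List[Event] = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            # Log-intensity difference, as in common event-camera models.
            delta = math.log(c + eps) - math.log(p + eps)
            if delta > threshold:
                events.append((x, y, +1))
            elif delta < -threshold:
                events.append((x, y, -1))
    return events

# Two 3x2 frames: one pixel brightens, one darkens, the rest are unchanged.
prev = [[100, 100, 100], [100, 100, 100]]
curr = [[100, 150, 100], [100, 100, 60]]
print(frames_to_events(prev, curr))  # [(1, 0, 1), (2, 1, -1)]
```

Only two of the six pixels produce any output, which illustrates the data reduction that makes event sensors attractive for low-latency detection.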

Pedestrian intention is typically inferred by a deep neural network that predicts the pedestrian’s intention for each input video frame. Neural networks are attractive for this task because they perform well in many real-world applications. However, training them to perform reliably in vehicle systems requires large amounts of annotated data.

Developments in event-based vision systems

Volvo Cars, the University of California, Berkeley, and Chalmers University collaborated to build an integrated end-to-end system for pedestrian intent detection. The smart collision avoidance system uses the following components:

· You Only Look Once, Version 3 (YOLOv3) is a real-time object detection algorithm that identifies specific objects in videos, live feeds, or images.

· Simple Online and Real-time Tracking (SORT) is a stripped-down implementation of a visual multiple object tracking framework based on basic data association and state estimation techniques.

· DeepSORT extends SORT by tracking objects based on both their motion (including velocity) and their appearance.

· Early Fused Skeleton Mapping generates a skeleton map for each tracked pedestrian.

· The Spatio-Temporal DenseNet Classifier classifies the intention of every detected and tracked pedestrian by analyzing that pedestrian’s last 16 frames.
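How these components fit together can be sketched as a per-frame loop: detections are associated with existing tracks, each track keeps a rolling window of its last 16 observations, and the intent classifier runs only once a full window is available. This is a minimal, hypothetical sketch rather than the Volvo/Berkeley/Chalmers implementation: greedy IoU matching stands in for SORT's association step (SORT itself uses a Kalman filter and the Hungarian algorithm), boxes stand in for skeleton maps, and `classify_intent` is a placeholder heuristic, not a Spatio-Temporal DenseNet. The names `IntentPipeline`, `Track`, and the 0.3 IoU threshold are assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels

WINDOW = 16  # frames per pedestrian, matching the classifier input described above

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

@dataclass
class Track:
    track_id: int
    # deque(maxlen=WINDOW) silently drops the oldest box once 16 are stored.
    history: Deque[Box] = field(default_factory=lambda: deque(maxlen=WINDOW))

def classify_intent(history: Deque[Box]) -> str:
    """Placeholder classifier (hypothetical heuristic): 'crossing' if the box
    moved more than 20 px horizontally across the window."""
    dx = abs(history[-1][0] - history[0][0])
    return "crossing" if dx > 20 else "not crossing"

class IntentPipeline:
    def __init__(self, iou_threshold: float = 0.3):
        self.iou_threshold = iou_threshold
        self.tracks: List[Track] = []
        self.next_id = 0

    def step(self, detections: List[Box]) -> Dict[int, Optional[str]]:
        """Process one frame of pedestrian boxes (e.g. from a detector such as YOLOv3)."""
        unmatched = list(self.tracks)
        for box in detections:
            # Greedily match the detection to the unmatched track with the highest IoU.
            best = max(unmatched, key=lambda t: iou(t.history[-1], box), default=None)
            if best is not None and iou(best.history[-1], box) >= self.iou_threshold:
                unmatched.remove(best)
                best.history.append(box)
            else:
                track = Track(self.next_id)
                track.history.append(box)
                self.tracks.append(track)
                self.next_id += 1
        # Track deletion for pedestrians who leave the scene is omitted for brevity.
        # Classify only tracks with a full 16-frame window; others report None.
        return {t.track_id: (classify_intent(t.history) if len(t.history) == WINDOW else None)
                for t in self.tracks}

pipeline = IntentPipeline()
for frame in range(WINDOW):
    x = 100 + 3 * frame  # one pedestrian moving 3 px to the right per frame
    result = pipeline.step([(x, 200, x + 40, 300)])
print(result)  # {0: 'crossing'}
```

Buffering per track, rather than per frame, is what lets the classifier reason about each pedestrian's recent motion instead of a single snapshot.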

AEye and Intvo demonstrated AI-driven pedestrian behavior prediction technology that combines Intvo’s smart software with AEye’s 2D/3D adaptive sensing iDAR platform. The technology is designed to replicate human vision, providing intelligent, adaptive sensing that enhances safety for all road users.

The core technology of Perceptive Automata, which is backed by Hyundai Cradle, uses sensor data recorded from moving vehicles during their interactions with people. This data is used to train deep learning models to interpret human behavior the way people do. The result is sophisticated AI software that can read a pedestrian’s intent and pass that information to the autonomous vehicle’s decision-making system. In 2019, Volvo Trucks and Dependable Highway Express (DHE) partnered with Perceptive Automata to explore human behavior prediction for the trucking industry.

Micron and Continental collaborated to develop pedestrian intent detection by combining machine learning and Micron’s deep learning accelerators with Continental’s advanced driver-assistance systems (ADAS). By learning from many hours of video captured by vehicle cameras, these systems not only identify pedestrians on the roadside, crossing the street, and elsewhere near the vehicle, but also predict what they are about to do next and take proactive action.


Automakers are increasing the capability of prediction technology

· Terranet, in collaboration with Mercedes-Benz, showcased VoxelFlow, an ultra-fast 3D motion awareness sensor that integrates three event cameras with a continuous laser scanner and uses triangulation to generate a 3D image in nanoseconds. The system can detect and track objects in 3D and determine their position, speed, and direction of travel. According to Terranet, the system reduces the reaction distance from six meters to twelve centimeters and is 50 times faster than existing technology.

· Renault entered into a strategic development partnership with Chronocam SA, a developer of biologically inspired vision sensors and computer vision solutions for automotive applications. Chronocam’s proprietary approach to computer vision leverages the company’s deep expertise in neuromorphic vision sensing, which mimics the human eye, and processing, which mimics the human brain.
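The Terranet description rests on two simple relationships. Laser-camera triangulation recovers depth from the known baseline between the laser and the camera and the angles at which each sees the illuminated point, and the distance a vehicle travels before reacting is its speed multiplied by the system latency. The sketch below assumes a simplified 2D geometry; the baseline, angles, speed, and function names are illustrative assumptions, not VoxelFlow's actual parameters. It also checks that the quoted figures are consistent: cutting latency by a factor of 50 shrinks a 6 m reaction distance to 6 / 50 = 0.12 m, i.e. twelve centimeters.

```python
import math

def triangulate_depth(baseline_m: float, laser_angle_rad: float, camera_angle_rad: float) -> float:
    """Perpendicular distance from the laser-camera baseline to the lit point (hypothetical helper).

    Simplified 2D geometry (assumption): laser at x = 0, camera at x = baseline,
    both angles measured from the baseline toward the point. From
    x = Z * cot(a) and baseline - x = Z * cot(b):  Z = baseline / (cot(a) + cot(b)).
    """
    cot_a = 1.0 / math.tan(laser_angle_rad)
    cot_b = 1.0 / math.tan(camera_angle_rad)
    return baseline_m / (cot_a + cot_b)

def reaction_distance(speed_m_s: float, latency_s: float) -> float:
    """Distance traveled before the system reacts: speed multiplied by latency."""
    return speed_m_s * latency_s

# Illustrative geometry: 0.5 m baseline, both rays at 80 degrees from the baseline.
depth = triangulate_depth(0.5, math.radians(80), math.radians(80))
print(f"depth = {depth:.2f} m")  # depth = 1.42 m

# A 50x reduction in latency scales the reaction distance by the same factor,
# whatever the vehicle speed (50 km/h is an illustrative choice).
speed = 50 / 3.6                    # m/s
slow_latency = 6.0 / speed          # latency implied by a 6 m reaction distance
fast_latency = slow_latency / 50    # "50 times faster"
print(f"{reaction_distance(speed, fast_latency):.2f} m")  # 0.12 m
```

Because reaction distance is linear in latency, the 50x speed-up and the six-meter-to-twelve-centimeter figure describe the same improvement.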

Stringent government regulations to accelerate pedestrian intent innovation

As the number of road fatalities continues to rise, governments in both developed and developing economies are introducing a wave of new vehicle regulations. For instance, the European Union (EU) has set out its Road Safety Policy Framework 2021-2030, which aims to halve the number of road deaths and serious injuries by 2030.

In April 2022, the EU published draft legislation for vehicles equipped with automated driving systems (ADS), focusing on the functional and operational safety of AVs. The ADS draft legislation is divided into two sections: ADS performance requirements and ADS compliance assessment. The performance requirements outline the capabilities an AV must have to be type-approved in Europe, while the compliance assessment outlines how an AV will be evaluated, audited, and tested prior to type approval. In addition, an ADS must demonstrate anticipatory behavior when interacting with other road users, including motorcycles, bicycles, and pedestrians, as well as other obstacles.

What the future holds

Event-based sensors will remain a vital technology for next-generation advanced driver assistance systems (ADAS), enabling automakers and suppliers to realize the full potential of Level 4 and Level 5 AVs. While mimicking human driver behavior in pedestrian intent prediction remains a challenge, companies are looking to scale autonomous driving so that vehicles, by communicating constantly with one another, can forecast each other’s movements more easily and precisely. Predicting other vehicles’ behavior is also crucial for safety in mixed traffic, where some vehicles are fully or partially autonomous while others are not.

In the early part of this decade, Netscribes expects to see prototypes of event-based sensors in vehicles at various levels of development. These systems will also need to meet the aesthetic demands of today’s drivers. Furthermore, most cars will be network-connected in the coming years, but it will take some time before all cars are autonomous and controlled by AI.

Understanding how technologies are evolving can help organizations adapt before it’s too late. Netscribes offers market research and technology insights that help automotive players of all sizes stay ahead of the competition by determining go-to-market strategies, market opportunities, and competitive intelligence.